How AI Can Support Therapists in 2025

As a mental health provider, I consider AI my best friend. Many people are scared of it, wondering whether it possesses the same capacities that we all have and will therefore one day take our jobs!

I don’t think it will, but I do think it can be a very supportive tool when used effectively. In this article, I’ll share some practical ways to use AI to level up your practice.

A therapist and an AI collaborating! Made by ChatGPT’s image generator.

Consultation

Now, this one may be a shocker to start with. I’m going to consult AI for my treatment?? But hang in there, don’t click out yet! AI can be a great tool for clinical consultation. Obviously, your clinical judgment is key here and you have the final say, but don’t be afraid to ask AI a question.

I have had AI give me some super helpful advice about what the signs of abuse are, how it’s defined, and how it applies to my cases. It can even give feedback on ways to approach this. Now, I will urge caution again: you have to be the one to take a step back and ask yourself whether it’s really good advice or not. In my experience, when AI is good it’s really good, and when it’s bad it’s really bad.

So, exercise caution, but don’t be afraid to use it to learn and to reflect on some cases if you don’t have the opportunity to bring them to someone else. That being said, if you want some real mental health provider consultation for free, we do host case consultation calls every Friday at noon Mountain Time. So, pop on by!

Treatment Planning

This is one of those relatively straightforward use cases for AI. As you know, a lot of insurance companies want “SMART” goals. Ugh, I shudder at the thought! I recognize, of course, why an insurance company wants to see that level of specificity and accountability, and… I still don’t like thinking up a SMART goal.

Ask AI to give you a SMART goal for a client dealing with moderate social anxiety. You’ll be surprised what comes up. Taking it a step further, you can give a high-level overview of your client’s case and presenting problems, as well as the format of your treatment plan, and it’ll give you the whole treatment plan. Again, exercise your clinical judgment before putting anything into the chart.

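I’ll also say here: do not put PHI into ChatGPT! Entering PHI into a consumer tool like ChatGPT without a business associate agreement (BAA) in place can be a HIPAA violation, because that data is sent to and stored by a third party. So, be doubly sure that everything you put into ChatGPT is de-identified.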
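If you’re comfortable with a little Python, here is a minimal sketch of a first-pass scrub you could run on text before pasting it anywhere. Everything in it is an assumption for illustration: the `redact` function, the pattern list, and the placeholder labels are hypothetical, and simple pattern matching like this only catches a few obvious identifiers. It does not amount to HIPAA de-identification on its own, so you still need to read the result yourself.

```python
import re

# Hypothetical patterns for a few obvious identifiers. This list is an
# assumption for illustration; it is far from a complete set of identifiers.
PATTERNS = {
    "[EMAIL]": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "[PHONE]": re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b"),
    "[SSN]": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "[DATE]": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
}


def redact(text, names=()):
    """Replace obvious identifiers with placeholders.

    `names` is a list of names you supply yourself (client, family members);
    the function cannot guess names on its own.
    """
    for placeholder, pattern in PATTERNS.items():
        text = pattern.sub(placeholder, text)
    for name in names:
        # Match the name as a whole phrase, ignoring upper/lower case.
        text = re.sub(rf"\b{re.escape(name)}\b", "[NAME]", text, flags=re.IGNORECASE)
    return text


note = "Jane Doe (DOB 4/12/1990) called from 303-555-0142 and emailed jane.doe@example.com."
print(redact(note, names=["Jane Doe"]))
# prints: [NAME] (DOB [DATE]) called from [PHONE] and emailed [EMAIL].
```

Details like an employer, a small town, or an unusual life event can still identify someone, and no pattern list will catch those. Treat this as a helper, not a replacement for reading what you’re about to paste.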

Note Taking

This is the use case you’ve probably heard all about. I’m not going to go into detail or name companies at this point, because this is not a sales pitch, but there are a lot of companies that provide AI notetakers. These usually listen to and record the session, and then give you a note in SOAP, DAP, or whatever format you prefer.

Be careful when using these. There are a few things you’ll want to be sure of:

Make sure to get your client’s consent before recording the session.

Make sure the note taker doesn’t store your data to be used to train models. Many companies will want to use that data to train up AI therapists to try to actually take your job! Make sure they don’t keep the notes or transcripts!

Make sure to double-check all of your notes for accuracy before putting them in your chart. The way AI works, it can ‘hallucinate’ things that weren’t actually said. This is a big liability when you’re dealing with insurance as it could be considered fraud.

Custom Use Cases

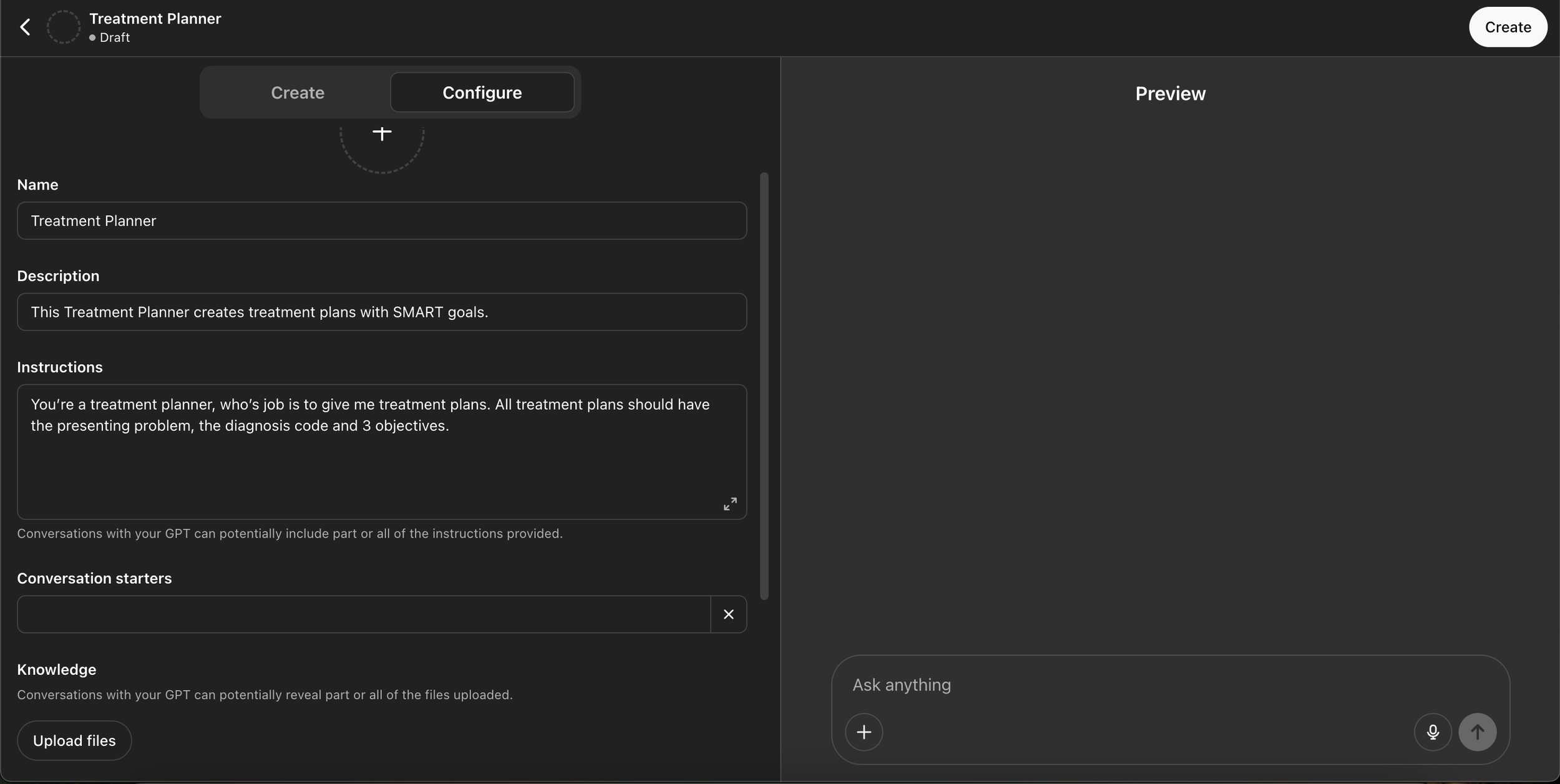

If you want to get a little more creative, I love to create Custom GPTs in ChatGPT. You’ll need the “Plus” version for this, which is $20/month (and totally worth it!). A “Custom GPT” is basically like using ChatGPT with a lot of instructions. They’re generally used when you want all of the power of ChatGPT but have a specific use case.

A Custom GPT allows you to provide instructions. For example, you could tell it:

You’re a treatment planner whose job is to give me treatment plans. All treatment plans should have the presenting problem, the diagnosis code, and 3 objectives.

You can also upload documents to it, as a “Knowledge Base”. For example, if you use DBT a lot, you might upload a DBT slide show and/or training manual, so that it can make sure to include some DBT language in your treatment plans.

Additionally, a good tip is to give it a “template” if you want things to look a certain way. For example, you might ask it to reference your EHR’s template for treatment plans so it comes out in the correct format.

The screenshot below shows what this process looks like. Note that you can also ‘talk’ to it in the chatbot style to tell it what you’d like it to do, rather than giving it specific instructions.

The custom GPT interface

The Future of AI in Therapy

I recognize AI is a very sensitive topic for a lot of people. It forces us to consider some big and scary questions. However, I think that by embracing AI we can each really supercharge our practices. My view is that AI will not replace therapy, but it will augment and support it! We will have new tools that open new doors for supporting clinicians and our clients. While certain companies are trying to replace us, I believe there’s something fundamental to being human that can’t be replicated by machines. What do you think? Let us know by sending an email to cole@iccpbc.com with your thoughts.

About the Author

Cole Butler, LPCC, ADDC, MACP

Cole Butler, LPCC, ADDC, MACP is a Mental Health Therapist and Writer. He co-founded Integrative Care Collective in 2023 to support mental health providers who are passionate about integrative care and to foster community amongst them. You can learn more about him and connect with him on LinkedIn: https://www.linkedin.com/in/cole-butler/